Generating a Movie Barcode

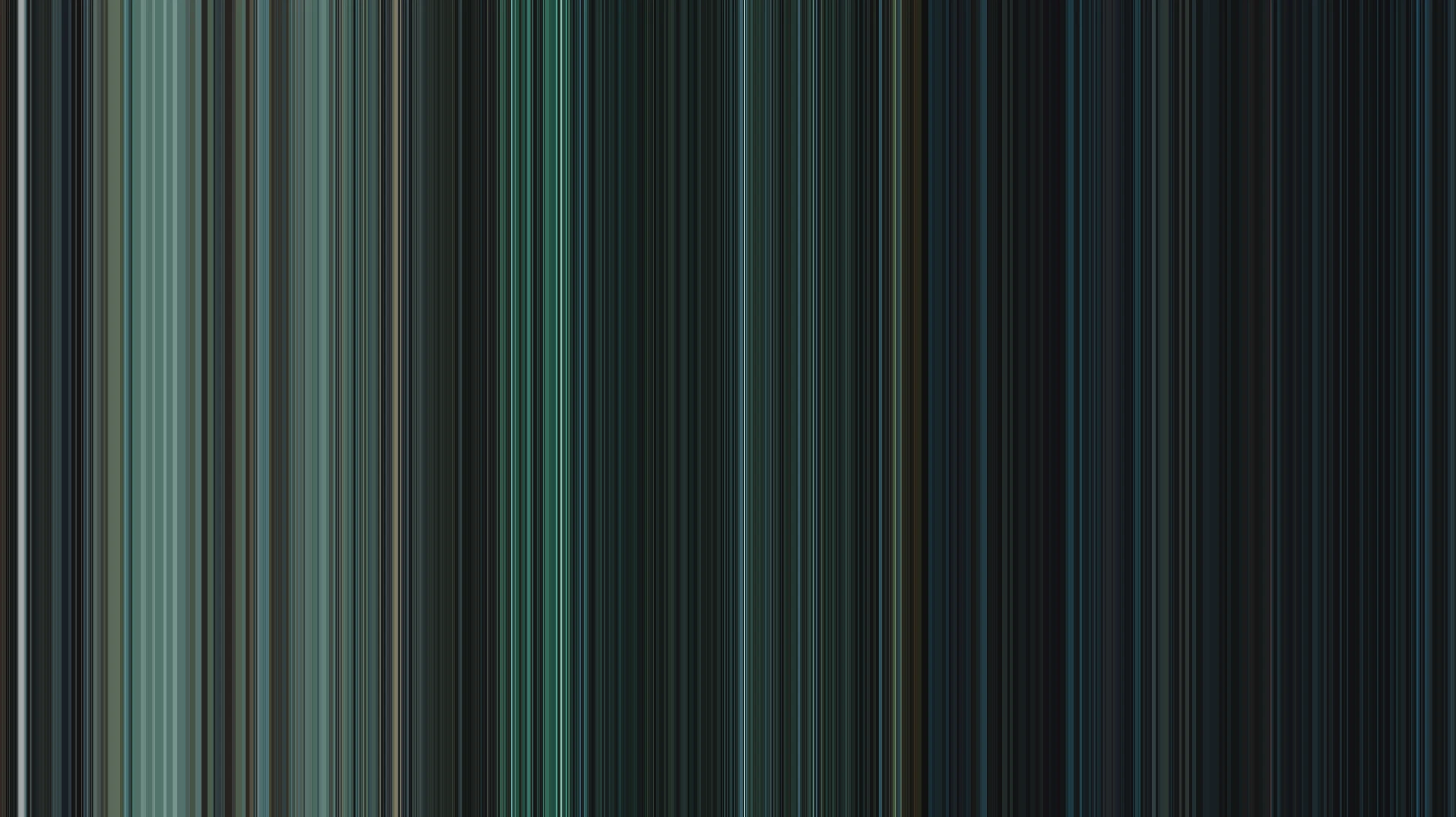

Task: Generate the 'barcode' of a movie. See examples to get a better understanding. Our choice of movie is Fight Club - because it's my favourite.

Preface

These 'barcodes' are a pretty old concept, dating back to at least 2015 from what I could find. A rather interesting thing I came across was how they can be used for analysis. For example, Bollywood films tend to be brighter, but Hollywood has more diversity.

My initial implementation idea was pretty straightforward. I Googled some image manipulation libraries and found stb. The next natural step was to save each frame of the film and then compute the average colour of each frame. Now, the copy of the film I have is in 1080p, so each frame, like this one, reaches about one megabyte in size.

So at 24 frames/second, a roughly two-hour film comes to $$ \frac{1\text{ MB}}{\text{frame}} \times \frac{24 \text{ frames}}{\text{second}} \times \frac{3600 \text{ seconds}}{\text{hour}} \times 2 \text{ hours}$$ $$= 172{,}800\text{ MB} \approx 173\text{ GB}$$

... and I barely have 30 gigs free on my laptop. I only did this math after my laptop filled up before the film was even a quarter processed. So I revised my approach to 'stream' the frames and calculate each average one by one.

The other thing to be mindful of is at roughly 180,000 frames, the merged image becomes way too wide. There are two approaches to this:

- Manually sample fewer frames. I set this to one in twelve frames for effectively two frames per second.

- Dynamically calculate the 'frame step' to see how many frames to skip. An example of this is u/barracuda415's code, which calculates this using step = int(round(num_frames / width / samples)). View his entire script here.
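Both sampling approaches above boil down to a little integer arithmetic, sketched here in C++. The helper names are hypothetical. Treating `samples` as the number of frames that contribute to one output column is my assumption about the quoted script. Note also that `std::lround` rounds halves away from zero, whereas Python's `round` rounds halves to even, so the two can differ by one on exact .5 values.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>

// Frames to advance per output column. `samples` is treated here as the
// number of frames contributing to one column (an assumption).
std::size_t frameStep(std::size_t numFrames, std::size_t width, std::size_t samples) {
    if (width == 0 || samples == 0) return 1;
    const double raw = static_cast<double>(numFrames) / width / samples;
    // Never return 0, otherwise the reader would not advance.
    return static_cast<std::size_t>(std::max(1L, std::lround(raw)));
}

// Number of columns produced when keeping one frame out of every `step`.
std::size_t sampledWidth(std::size_t numFrames, std::size_t step) {
    return step == 0 ? 0 : (numFrames + step - 1) / step;
}
```

For the roughly 180,000 frames mentioned above, a 1920-wide target with one sample per column gives a step of 94, while the fixed one-in-twelve sampling gives an image 15,000 columns wide.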

Results

Doing every single frame gave a resultant image of 193261 × 1080 resolution - way too wide. So I reduced the sampling rate to capture one in 12 frames. This was actually still very wide (at 16106 × 1080) and I got tired of waiting around so I just cropped the ending to a 16:9 resolution:

The other two resolutions are available to view at the Git repo.
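For illustration, here is a minimal sketch of turning the per-frame average colours into the final image, one colour per column, with a window so that only some of the columns are kept. At 1080 pixels tall, a 16:9 crop is 1920 columns wide. The function name `renderBarcode` and the `first` offset are hypothetical; which end of the strip to keep is left to the caller.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

using Rgba = std::array<std::uint8_t, 4>;

// Expands up to `outWidth` column colours, starting at column `first`,
// into a row-major RGBA image that is `height` rows tall.
std::vector<std::uint8_t> renderBarcode(const std::vector<Rgba>& columns,
                                        std::size_t first, std::size_t outWidth,
                                        std::size_t height) {
    if (first >= columns.size()) return {};
    outWidth = std::min(outWidth, columns.size() - first);
    std::vector<std::uint8_t> image;
    image.reserve(outWidth * height * 4);
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < outWidth; ++x) {
            const Rgba& c = columns[first + x]; // every row repeats the same colour strip
            image.insert(image.end(), c.begin(), c.end());
        }
    }
    return image;
}
```

The returned buffer is laid out the way an image writer such as stb's expects (tightly packed rows of 4-byte pixels), though the exact call used in the repo is not shown here.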

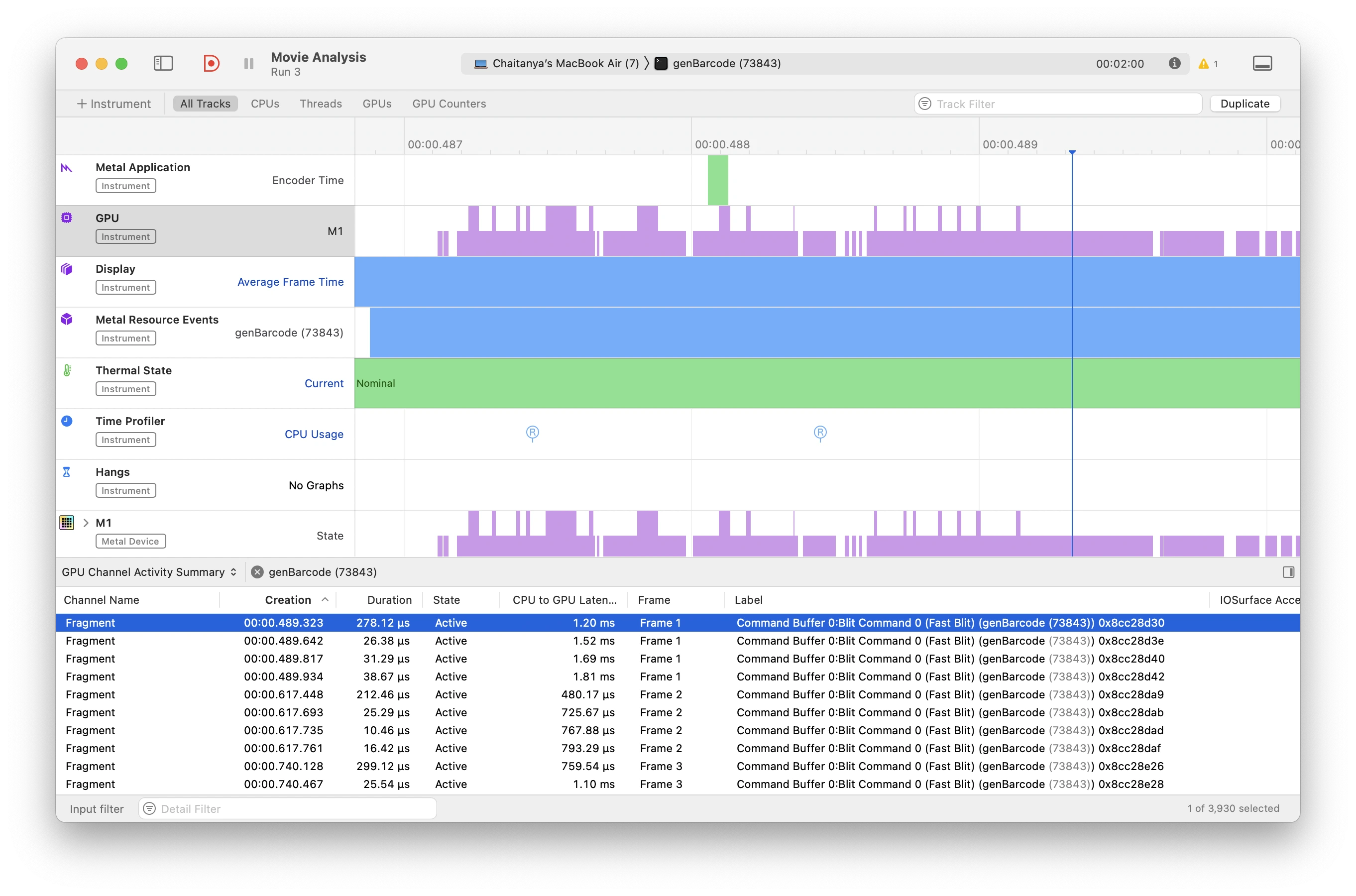

Another curious thing showed up in the profile of this app: I saw four 'Fragment' entries for each frame and initially assumed these must be the RGBA channels. But then the durations seemed odd. If you see here, the first fragment of each frame consistently takes longer than the rest:

I then realised that this is actually the generation of the mipmap levels. For each frame, we call setMipmapLevelCount(...) on the texture descriptor, which sizes the mipmap chain that gets allocated when the texture is created. Then we call the generateMipmaps(...) method, which actually does the blitting. My guess for the shorter subsequent durations is that, since each mipmap level has a lower resolution than the last, each copy operation takes less time.

A sidenote on performance: since this approach is stream-based, it's quite slow. Generating the highest resolution image took a total of 6:32:59.60, over six hours. I experimented with parallelising this process (as ffmpeg was the actual bottleneck) but I got pretty caught up in implementing the std::map. Perhaps the most performant solution would be to use the macOS built-in utilities such as AVFoundation.

Some GPU jargon

I'm gonna go over some GPU related keywords that'll help the explanation in the next section.

What's a Texel?

A Texel is equivalent to a pixel for a texture. If one texel takes up one pixel, the texture is viewed at its native resolution. In case of more than one texel per pixel, the image is minified and in case of fewer than one texel per pixel, the image is magnified.

What's a Mipmap?

A mipmap is a set of textures that represent the same image at multiple resolutions, each level typically being half the width and height of the previous one. One common use-case for these is reducing aliasing when textures are viewed from a distance at lower resolutions. Each level of a mipmap is stored as an array of texels.
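As a rough CPU illustration of how one level is derived from the previous one, the sketch below averages each 2 × 2 block of texels (a box filter). The box filter is an assumption for illustration: the filter a GPU actually uses when generating mipmaps may differ. The `Image` struct and `nextMipLevel` are hypothetical names, and the source image is assumed to be non-empty.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Image {
    std::size_t width = 0;
    std::size_t height = 0;
    std::vector<std::uint8_t> rgba; // row-major, 4 bytes per texel
};

// Produces the next mip level by averaging each 2x2 block of texels.
// A trailing odd row or column is ignored; the clamping only guards the
// case where a dimension is already 1.
Image nextMipLevel(const Image& src) {
    Image dst;
    dst.width = std::max<std::size_t>(1, src.width / 2);
    dst.height = std::max<std::size_t>(1, src.height / 2);
    dst.rgba.resize(dst.width * dst.height * 4);
    auto at = [&](std::size_t x, std::size_t y, std::size_t c) -> unsigned {
        return src.rgba[(y * src.width + x) * 4 + c];
    };
    for (std::size_t y = 0; y < dst.height; ++y) {
        for (std::size_t x = 0; x < dst.width; ++x) {
            const std::size_t x0 = std::min(2 * x, src.width - 1);
            const std::size_t x1 = std::min(2 * x + 1, src.width - 1);
            const std::size_t y0 = std::min(2 * y, src.height - 1);
            const std::size_t y1 = std::min(2 * y + 1, src.height - 1);
            for (std::size_t c = 0; c < 4; ++c) {
                const unsigned sum = at(x0, y0, c) + at(x1, y0, c) + at(x0, y1, c) + at(x1, y1, c);
                dst.rgba[(y * dst.width + x) * 4 + c] = static_cast<std::uint8_t>((sum + 2) / 4); // rounded mean
            }
        }
    }
    return dst;
}
```

Applying this repeatedly until the image is 1 × 1 leaves a single texel that approximates the average colour of the whole image, which is the property the barcode relies on.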

What's a blit?

A blit is either a 'block transfer' or a 'bit-block transfer', depending on which documentation you refer to. Blit operations are used to copy (and often also modify) data from one location in GPU memory to another, or even across multiple connected GPUs when required.

A very simple example of 'blitting' is how subtitles are overlaid on a video stream. The subtitle text is rendered as an RGBA bitmap image by the video player, VLC for example. This bitmap is then overlaid onto the video frame before output.
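The overlay step can be sketched per texel as the standard 'source-over' blend. This is a minimal CPU version that assumes straight (non-premultiplied) 8-bit alpha and an opaque video frame; `blendOver` is a hypothetical name, and a real player would do this on whole bitmaps, typically on the GPU.

```cpp
#include <array>
#include <cstdint>

using Rgba = std::array<std::uint8_t, 4>;

// Source-over blend of a straight-alpha subtitle texel onto an opaque video texel.
Rgba blendOver(const Rgba& src, const Rgba& dst) {
    const unsigned a = src[3];
    Rgba out{};
    for (int c = 0; c < 3; ++c) {
        // out = src * alpha + dst * (1 - alpha), rounded, in 0..255 fixed point
        out[c] = static_cast<std::uint8_t>((src[c] * a + dst[c] * (255 - a) + 127) / 255);
    }
    out[3] = 255; // the video frame is assumed opaque
    return out;
}
```

A fully transparent subtitle texel leaves the video untouched, a fully opaque one replaces it, and anything in between mixes the two in proportion to alpha.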

I actually misread this as 'BLT' initially and kept calling it that for some time. This made me constantly hungry throughout this project. Beware of naming your variables so close to something delish, as it could severely reduce your development speed.

Sidenote on Compatibility

I came across the DirectX documentation when trying to explore some examples of blits. The similarity in the DirectX API and Metal API to copy texture data was rather interesting to me. This is the snippet from the MS Docs:

    void CopyTextureRegion(
      [in]           const D3D12_TEXTURE_COPY_LOCATION *pDst,
                     UINT                              DstX,
                     UINT                              DstY,
                     UINT                              DstZ,
      [in]           const D3D12_TEXTURE_COPY_LOCATION *pSrc,
      [in, optional] const D3D12_BOX                   *pSrcBox
    );

And this is the equivalent code from MTLBlitCommandEncoder.hpp:

    void copyFromTexture(
        const class Texture* sourceTexture,
        NS::UInteger sourceSlice,
        NS::UInteger sourceLevel,
        MTL::Origin sourceOrigin,
        MTL::Size sourceSize,
        const class Texture* destinationTexture,
        NS::UInteger destinationSlice,
        NS::UInteger destinationLevel,
        MTL::Origin destinationOrigin
    );

This similarity is well ... obvious. But I found it fascinating how these shared GPU semantics seem to converge.

Then I started looking for differences and found a functional division in DirectX. It has what it calls GPU engines, with dedicated pipelines (queues) for 3D, compute, and copy operations. Metal is more high-level: you just have an MTLCommandQueue, and there is no manual queuing of objects. This is a matter of design preference, but I feel the Metal way is simpler.

Logical Explanation

This has actually been one of the simplest pieces of code so far - there's no MSL shader code! The primary logic is in the mipmap-chain, in this snippet:

    // number of mipmap levels required to get to a 1x1 texture
    size_t maxDim = std::max(width, height);
    pTexDesc->setMipmapLevelCount(static_cast<size_t>(log2(maxDim)) + 1);

A mipmap level is created by halving both the width and height (with each dimension clamped at 1) until the largest dimension becomes 1. Therefore, the number of steps is determined by the largest dimension. For a 1920x1080 texture, maxDim would be 1920, and $\log_2(1920) \approx 10.9$. The cast truncates this to $10$, and adding one for level $0$ gives $11$ mipmap levels. Rounding up would give the same answer here, but it would come up one short for exact powers of two such as 1024, which is why the code uses floor plus one.
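To sanity-check the level count, here is a small sketch that enumerates the dimensions of every level with integer halving rather than a floating-point logarithm. The function name `mipChain` is hypothetical, and the halving rule (floor, clamped at 1) is the usual convention rather than something taken from the repo.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

// Dimensions of every mip level, from level 0 down to 1x1.
std::vector<std::pair<std::size_t, std::size_t>> mipChain(std::size_t width,
                                                          std::size_t height) {
    std::vector<std::pair<std::size_t, std::size_t>> levels;
    if (width == 0 || height == 0) return levels;
    levels.emplace_back(width, height);
    while (width > 1 || height > 1) {
        width = std::max<std::size_t>(1, width / 2);   // halve, but never below 1
        height = std::max<std::size_t>(1, height / 2);
        levels.emplace_back(width, height);
    }
    return levels;
}
```

For 1920x1080 the chain runs 1920x1080, 960x540, 480x270, 240x135, 120x67, 60x33, 30x16, 15x8, 7x4, 3x2, 1x1, which is eleven levels and matches $\lfloor \log_2(1920) \rfloor + 1$.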

    auto pTexture = NS::TransferPtr(pDevice->newTexture(pTexDesc.get()));
    if (!pTexture) { return nullptr; }

    // pixel data goes from CPU buffer to the texture
    MTL::Region region = MTL::Region::Make2D(0, 0, width, height);
    NS::UInteger bytesPerRow = CHANNELS * width;
    pTexture->replaceRegion(region, 0, pixelBuffer.data(), bytesPerRow);

The replaceRegion(...) method here takes the raw pixel data and pushes it to level $0$. Once the command buffer is committed, the result must be read back to main memory. This is done in the calculateAverageColor(...) method, where we must specify which level to read. pTexture->mipmapLevelCount() returns $11$, but the indexing goes over $\{0, \dots, 10\}$, so we must subtract one here:

    MTL::Region region = MTL::Region::Make2D(0, 0, 1, 1);
    // ...
    size_t lastMipLevel = pTexture->mipmapLevelCount() - 1;
    pTexture->getBytes(&avgColor, bytesPerRow, region, lastMipLevel);

The main() driver method is large, but that's only because of the mess of dealing with file descriptors. The ffmpeg command is pretty straightforward. The most important argument here is rgba, as this must correspond with our chosen pixel layout. I wish std::system was more comprehensive, but not yet. Also, I would have loved to use std::jthread in this code, but it seems my version of clang++ doesn't yet support it.
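For readers who want to avoid raw file descriptors, one possible alternative (not what the code described above does) is POSIX popen. The sketch below builds an ffmpeg command that decodes a file to raw RGBA on stdout and wraps the pipe in a smart pointer. The helper names are hypothetical, the path quoting is deliberately naive, and popen is POSIX-only.

```cpp
#include <cstdio>
#include <memory>
#include <string>

// Builds a command that decodes `path` and writes raw RGBA frames to stdout.
// The quoting is naive: it assumes the path contains no double quotes.
std::string ffmpegCommand(const std::string& path) {
    return "ffmpeg -v error -i \"" + path + "\" -f rawvideo -pix_fmt rgba pipe:1";
}

// Closes the pipe (and reaps the child process) when the pointer goes out of scope.
struct PipeCloser {
    void operator()(std::FILE* f) const {
        if (f) pclose(f);
    }
};
using Pipe = std::unique_ptr<std::FILE, PipeCloser>;

// Starts ffmpeg and returns the read end of its stdout; null on failure.
Pipe openFrameStream(const std::string& path) {
    return Pipe(popen(ffmpegCommand(path).c_str(), "r"));
}
```

Each frame can then be pulled with `std::fread(buffer.data(), 1, width * height * 4, pipe.get())`, stopping when fewer bytes than a full frame come back. The frame dimensions still have to be known up front, since rawvideo output carries no header.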

Gratitude

The stb library is the work of Sean T. Barrett among hundreds of others. Thank you all for your work.

Bartosz Ciechanowski's explanation of Alpha Compositing is one of the best-written blogs I've ever come across. If you're curious about the aforementioned rendering of subtitles on video streams, this is a must-read.

Iman Irajdoot's explanation of Texels and Mipmaps is very clear and concise.